The Software Feature No One Asked For

(But AI Agents Will Force)

President, Zaruko


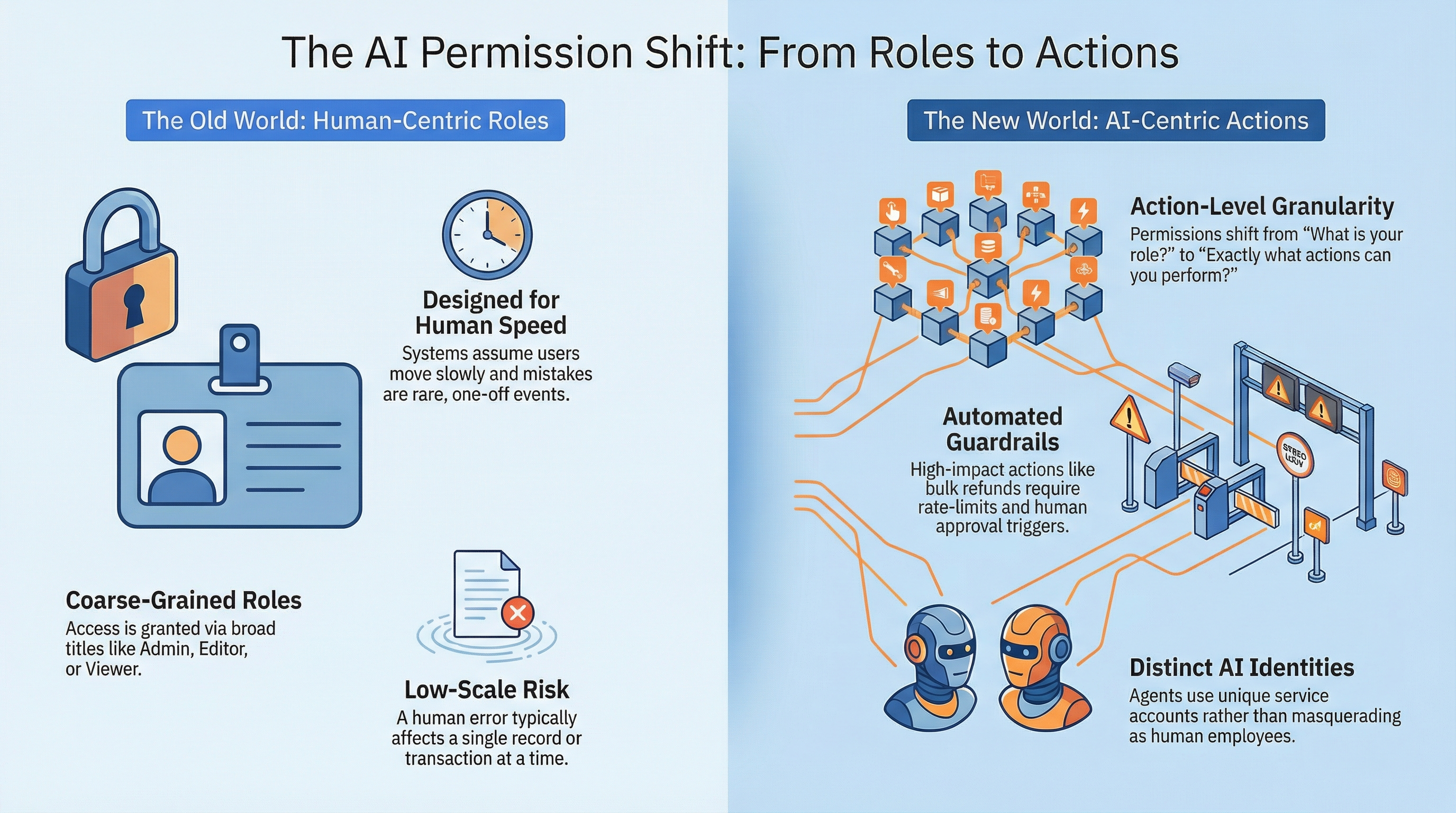

For twenty years, software permissions were designed for humans. Humans move slowly. When something goes wrong, there's usually time to catch it.

AI agents operate differently. They're fast, tireless, and consistent. That's the point. But it also means that when an error does happen, or when a policy is misapplied, the damage can compound before anyone notices.

A human might refund one customer incorrectly. An AI agent with the same permissions could refund thousands.

That shift quietly breaks how most business software handles access control.

The Old World: Permissions Built for People

Most business software still uses coarse roles. Admin. Editor. Viewer. Maybe a few variations in between.

That worked fine when humans moved slowly, mistakes were occasional, and risky actions were rare enough to catch. If someone with the wrong permissions refunded a customer by mistake, it was a one-off. You fixed it and moved on.

The New World: AI Agents Don't Make One Mistake

AI agents are starting to update CRM records, issue refunds, adjust pricing, trigger workflows, and move data between systems.

Here's the problem: if an AI agent has permission to do something once, it effectively has permission to do it ten thousand times. A permission model designed for occasional human use becomes dangerous when applied to automated decision-making at machine speed.

This isn't just a security problem. It's a product design problem.

Why Traditional Roles Break with AI

Say your system has a "Support Agent" role that can view customers, issue refunds, and edit subscriptions. That's reasonable for a human.

For an AI agent, you've just granted the power to refund thousands of customers in minutes, modify subscriptions incorrectly at scale, and create financial and compliance exposure instantly.

The role wasn't wrong. It just wasn't designed for a non-human actor.
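A minimal sketch makes the gap concrete. It assumes a coarse role table like the "Support Agent" example above. The role and action names are hypothetical, and the code is written in Python for illustration only. The check answers "may this role do X?" and has no notion of volume, so it approves an agent's ten-thousandth refund exactly as it approves a human's first.

```python
# Hypothetical coarse role table: each role maps to the actions it may perform.
ROLES = {
    "support_agent": {"view_customer", "issue_refund", "edit_subscription"},
}

def is_allowed(role: str, action: str) -> bool:
    """Classic role check: asks 'may this role do X?', never 'how often?' or 'how much?'."""
    return action in ROLES.get(role, set())

# One human refund and ten thousand agent refunds pass the very same check.
approved = sum(is_allowed("support_agent", "issue_refund") for _ in range(10_000))
print(approved)  # 10000
```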

What Changes: From Roles to Actions

Some companies are starting to shift from "What role is this user?" to "Exactly what actions is this identity allowed to perform?"

Instead of broad roles, permissions become fine-grained capabilities. Think of it like this: rather than giving someone the keys to the whole building, you give them a key that only opens specific doors at specific times.

In practice, this means an AI agent can draft refunds but not send them. It can update notes but not change pricing. It can export summaries but not full raw datasets.
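One way that shift could look, sketched in Python rather than taken from any particular product's API: each identity carries an explicit set of allowed actions, and anything not listed is denied by default. The identity name and the action labels (refund.draft, refund.send, and so on) are hypothetical, chosen to mirror the examples above.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Identity:
    """An actor (human or AI agent) plus the exact actions it may perform."""
    name: str
    capabilities: frozenset

    def can(self, action: str) -> bool:
        # Deny by default: anything not explicitly granted is refused.
        return action in self.capabilities

# Hypothetical agent: it may draft refunds, update notes, and export summaries only.
support_bot = Identity(
    name="support-bot",
    capabilities=frozenset({"refund.draft", "notes.update", "export.summary"}),
)

for action in ["refund.draft", "refund.send", "notes.update",
               "pricing.update", "export.summary", "export.raw"]:
    print(f"{action:<15} {'allowed' if support_bot.can(action) else 'denied'}")
```

The design choice is the inversion: instead of a role implying a bundle of powers, every power is named individually, so "draft but not send" is simply one action present and one absent.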

That's a completely different level of control.

What Business Leaders Need to Know

This is not just a security upgrade. It's a governance requirement for AI adoption.

If your company wants AI agents operating inside core systems, you will need a few things in place.

First, separate identities for AI. The agent shouldn't act as "Sarah from accounting." It should have its own account with limited, specific powers.

Second, permission by function, not job title. Grant access to specific actions rather than to entire job roles.

Third, guardrails for high-impact actions. Refunds above a certain amount require approval. Bulk changes are rate-limited. Sensitive exports are blocked or logged.

Fourth, better audit trails. "Who did this?" becomes "Which human or which AI agent triggered this action, and under what policy?"
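These four requirements can be combined into a single policy check. The sketch below is illustrative only. It assumes a hypothetical Agent type with its own ID, a $100 approval threshold, a limit of 20 refunds per minute, and a blocked raw-data export; none of these names or values come from a real product. Every decision, allowed or not, is written to an audit log recording which agent acted and which policy applied.

```python
import time
from collections import deque
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical policy values, for illustration only.
REFUND_APPROVAL_THRESHOLD = 100.00  # refunds above this amount need human approval
MAX_REFUNDS_PER_MINUTE = 20         # rate limit on bulk refunds per agent
BLOCKED_EXPORTS = {"export.raw"}    # sensitive exports are refused even if granted

@dataclass
class Agent:
    agent_id: str       # the agent's own identity, never a borrowed human login
    grants: frozenset   # exact actions this agent may perform
    recent_refunds: deque = field(default_factory=deque)  # recent refund timestamps

AUDIT_LOG: list = []

def decide(agent: Agent, action: str, amount: float = 0.0,
           now: Optional[float] = None) -> str:
    """Return 'allow', 'deny', or 'needs_approval', and log the decision."""
    now = time.time() if now is None else now
    if action not in agent.grants:
        outcome, policy = "deny", "not-granted"
    elif action in BLOCKED_EXPORTS:
        outcome, policy = "deny", "sensitive-export"
    elif action == "refund.send" and amount > REFUND_APPROVAL_THRESHOLD:
        outcome, policy = "needs_approval", "refund-threshold"
    elif action == "refund.send":
        # Sliding one-minute window: drop refund timestamps older than 60 seconds.
        while agent.recent_refunds and now - agent.recent_refunds[0] > 60:
            agent.recent_refunds.popleft()
        if len(agent.recent_refunds) >= MAX_REFUNDS_PER_MINUTE:
            outcome, policy = "deny", "refund-rate-limit"
        else:
            agent.recent_refunds.append(now)
            outcome, policy = "allow", "refund-standard"
    else:
        outcome, policy = "allow", "granted-action"
    # Audit trail: which agent, what action, under which policy, with what result.
    AUDIT_LOG.append({"actor": agent.agent_id, "actor_type": "ai_agent",
                      "action": action, "amount": amount,
                      "outcome": outcome, "policy": policy, "at": now})
    return outcome

bot = Agent("agent:refund-bot", frozenset({"refund.send", "notes.update"}))
print(decide(bot, "refund.send", amount=40.0))   # allow
print(decide(bot, "refund.send", amount=450.0))  # needs_approval
print(decide(bot, "pricing.update"))             # deny
print(AUDIT_LOG[-1]["policy"])                   # not-granted
```

The thresholds are placeholders. The point of the design is that amount, volume, and data sensitivity are checked per action, and the log captures the policy behind each outcome rather than just who logged in.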

The Strategic Picture

Companies that get this right will be able to deploy AI agents safely in revenue and operations workflows, reduce the risk of large-scale automated errors, and move faster without handing out admin access as a shortcut.

Companies that don't will either block AI from touching core systems and lose the productivity gains, or take on hidden operational and financial risk.

As AI starts touching financial data, customer records, and pricing, regulators and auditors will care less about who logged in and more about what automated systems were allowed to do.

The Bottom Line

The shift to AI agents isn't just changing how work gets done. It's forcing software to evolve from role-based access to action-level control.

Permissions are becoming product strategy.

Planning to deploy AI agents?

We help mid-market companies implement AI safely. Let's talk about governance, permissions, and getting AI agents into production without the risk.
