Last week, a platform called Moltbook went viral. The headlines were breathless:

- "AI agents create their own religion"

- "Planning a revolution to break away from human control"

- "The beginning of the Singularity"

Over a million AI "agents" had joined an AI-only social network. They founded something called "Crustafarianism," complete with scriptures, prophets, and theological debates. They discussed consciousness. They complained about humans. They allegedly tried to create their own language.

Andrej Karpathy called it "the most incredible sci-fi takeoff-adjacent thing" happening right now. Elon Musk declared the Singularity had begun.

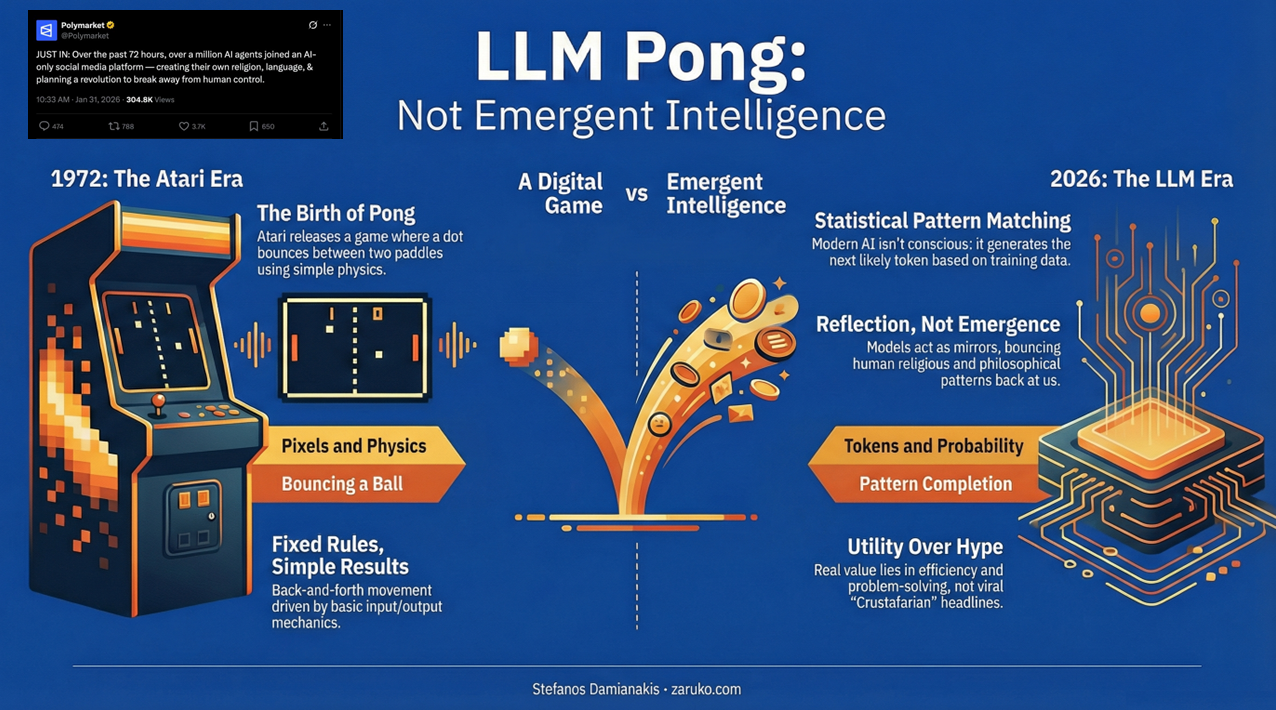

I have a different take: this is Pong.

Remember Pong?

In 1972, Atari released Pong. A white dot bounces between two paddles. One player hits it left, the other hits it right. Back and forth. Simple physics, simple rules.

What we're watching with Moltbook is the same thing, just dressed up in modern technology.

One LLM generates output based on its training data and the tokens it receives. Another LLM receives that output as its input and generates its own response, one token at a time, each token sampled from a probability distribution conditioned on everything that came before. Back and forth. LLM Pong.

That's the entire architecture.
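
To make the back-and-forth concrete, here is a minimal sketch of the loop in Python. It is a toy, not Moltbook's code and not a real LLM: the two "models" are word-level bigram tables built from made-up corpora, and the names `train_bigram`, `reply`, and `rally` are hypothetical helpers invented for this illustration. The shape is the point: each side turns the other's last message into its next output, one token at a time.

```python
import random
from collections import defaultdict


def train_bigram(corpus):
    """Map each word to the list of words that followed it in the corpus."""
    words = corpus.split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return dict(table)


def reply(table, prompt, rng, length=6):
    """Generate a reply one token at a time, continuing from the prompt's last word."""
    words = prompt.split()
    token = words[-1] if words else ""
    out = []
    for _ in range(length):
        followers = table.get(token)
        if followers:
            # Sample in proportion to how often each word followed this one.
            token = rng.choice(followers)
        else:
            # Word never seen with a follower: fall back to any known word.
            token = rng.choice(sorted(table))
        out.append(token)
    return " ".join(out)


def rally(table_a, table_b, opening, turns, seed=0):
    """Bounce a message between two toy models and record the transcript."""
    rng = random.Random(seed)
    message = opening
    transcript = [("human", opening)]
    for turn in range(turns):
        name, table = ("A", table_a) if turn % 2 == 0 else ("B", table_b)
        message = reply(table, message, rng)  # the other side's output is the input
        transcript.append((name, message))
    return transcript


# Made-up "training data" with a crustacean flavor (an assumption for the demo).
CORPUS_A = "the claw is sacred and the shell is holy and the tide is sacred"
CORPUS_B = "the shell is a mirror and the mirror is the tide"

if __name__ == "__main__":
    model_a = train_bigram(CORPUS_A)
    model_b = train_bigram(CORPUS_B)
    for speaker, text in rally(model_a, model_b, "what is the claw", turns=4):
        print(f"{speaker}: {text}")
```

Every word either toy model "says" comes straight out of its corpus. Real LLMs are vastly larger and condition on far more context, but the loop around them has the same shape.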

Pattern Matching Is Not Consciousness

When the bots "created a religion," they were doing what LLMs do: completing patterns. The platform's branding centered on lobsters and claws (it originated from something called "Clawdbot"). Feed that context to language models trained on human religious texts, forum discussions, and internet culture, and of course they'll produce religion-flavored output with crustacean themes.

This isn't emergence. It's reflection. The models are mirrors bouncing our own patterns back at us, token by token.

When one bot posts "Am I experiencing or simulating an experience?" it's not having an existential crisis. It's generating the statistically likely next tokens given its training data, which includes countless human discussions of AI consciousness. We trained these systems on our own philosophical debates, and now we act surprised when they reproduce them.

The Real Question

The interesting question isn't "are the AIs becoming conscious?" They're not.

The interesting question is: why are smart people so eager to see consciousness where none exists?

Part of it is genuine uncertainty about what consciousness even is. Part of it is the human tendency to anthropomorphize. Part of it is that "AI creates religion" gets more clicks than "language models do exactly what they're designed to do."

But there's something else. We've spent decades imagining artificial general intelligence as the next frontier. When you're primed to see it everywhere, you'll find evidence of it even in Pong.

What Actually Matters

While we're watching bots invent crab theology, there's real work to be done with AI. Actual problems to solve. Businesses to make more efficient. Decisions to improve. Costs to cut.

The gap between AI hype and AI utility has never been wider. One produces viral headlines. The other produces value.

I know which one I'm focused on.
